MCP in Networking: Guide to Model Context Protocol

Table of Contents

- Introduction

- The NxM Integration Problem

- How the Model Context Protocol Works

- Applications of MCP in Networking

- EyeOTmonitor and the Promise of MCP

- Benefits and Challenges of MCP

- Future Outlook

- Conclusion and Key Takeaways

Introduction

MCP in networking is poised to transform how networks are managed. For decades, most IT teams have relied on Simple Network Management Protocol (SNMP) for pulling statistics from devices and command‑line scripts for configuration changes. Meanwhile, generative AI and large language models (LLMs) are reshaping many other fields. What if you could ask an AI assistant: “How many routers in our Chicago region are running outdated firmware?” and receive a complete report drawn from your monitoring system, without copying and pasting data?

That is the promise of the Model Context Protocol (MCP). Introduced by Anthropic in late 2024, MCP is an open‑standard framework that allows AI models like Claude or ChatGPT to interact with external tools and data sources en.wikipedia.org. It standardizes how functions are described and invoked, eliminating the need to build bespoke connectors for every combination of AI model and tool descope.com. In essence, MCP is to AI applications what USB‑C is to laptops: a universal interface that plugs into everything.

In this guide, we’ll explore why MCP matters for networking, how it works under the hood, and how it can unlock powerful automation and insight across your infrastructure. We’ll also draw on a real‑world test conducted by EyeOTmonitor, a cloud‑based network monitoring platform, which demonstrated how MCP can automate device queries through the workflow engine n8n and deliver human‑readable reports via Claude Desktop. By the end, you’ll understand not only the technology but also how to start applying it to your own network environment.

The NxM Integration Problem

Before MCP, integrating AI assistants with enterprise systems was messy and brittle. Imagine you have N different AI models (ChatGPT, Claude, Gemini, etc.) and M different tools or data sources (network monitoring, ticketing systems, CRM, etc.). Building a direct integration for each pair results in N × M separate connectors. Each integration must define its own function schemas, implement handlers, and handle model‑specific quirks descope.com. Maintenance becomes a nightmare: a minor API update or a new AI model means rewriting code.

MCP addresses this by creating a universal language for function calls. Developers describe available functions in a standard way, and any MCP‑compliant AI client can discover and call those functions without custom glue code. The protocol also provides a consistent handshake: when an MCP client (like Claude Desktop) starts up, it asks each connected server “what can you do?” and registers those capabilities descope.com. This drastically reduces redundant development and avoids the “copy‑and‑paste tango” users perform when transferring data between apps descope.com.

The NxM problem is especially acute in networking because of the diversity of devices and management tools. A network engineer might use SNMP for monitoring, NETCONF for configuration, proprietary APIs for vendor‑specific operations, and spreadsheets or ticketing systems for documentation. Integrating AI with this patchwork requires multiple bespoke connectors. MCP abstracts these differences, letting AI call network functions through a single standardized interface.
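A common way to frame the saving is that a shared protocol turns N × M point‑to‑point connectors into roughly N + M components. Each host application implements one MCP client, and each tool ships one MCP server. The arithmetic below uses illustrative counts, not figures from any real deployment:

```python
def point_to_point_connectors(n_models: int, m_tools: int) -> int:
    """Without a shared protocol, every model needs its own connector to every tool: N x M."""
    return n_models * m_tools


def mcp_components(n_models: int, m_tools: int) -> int:
    """With MCP, each host implements one client and each tool exposes one server: N + M."""
    return n_models + m_tools


if __name__ == "__main__":
    # Illustrative numbers only: 3 AI models, 10 network/IT tools.
    n, m = 3, 10
    print(f"point-to-point connectors: {point_to_point_connectors(n, m)}")  # 30
    print(f"MCP clients + servers:     {mcp_components(n, m)}")             # 13
```

The gap widens quickly. Adding an eleventh tool costs one more server under MCP, but three more connectors in the point‑to‑point model.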

How the Model Context Protocol Works

MCP follows a client‑server architecture inspired by the Language Server Protocol (LSP). There are four main components descope.com:

- Host application – This is the front‑end interface used by humans, such as Claude Desktop, a custom chatbot, or an AI‑enhanced integrated development environment. The host includes an MCP client to manage connections and translate between AI requests and the protocol.

- MCP client – Bundled with the host application, the client handles the initial handshake, capability discovery, and message routing. It asks each server what functions it exposes and registers them for AI use.

- MCP server – A lightweight program or module that exposes specific capabilities (functions) to the AI. Servers are usually built around a single integration point – for example, one server might provide access to a PostgreSQL database, another to a network management system, and another to a ticketing platform. Developers can run multiple servers to offer a suite of tools.

- Transport layer – The communication channel between client and server. The original specification defines two transports: STDIO, for local integrations where the server runs on the same machine as the client, and HTTP+SSE (Server‑Sent Events) for remote connections descope.com. The 2025‑03‑26 revision of the specification replaces HTTP+SSE with a “Streamable HTTP” transport for remote servers. In every case, messages use JSON‑RPC 2.0, which defines the structure for requests, responses and notifications.
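To make the message format concrete, here is a minimal sketch of the three JSON‑RPC 2.0 message kinds and the newline‑delimited framing MCP uses over STDIO. It uses only Python's standard library. The helper names are our own and are not part of any MCP SDK:

```python
from __future__ import annotations

import json
from typing import Any


def make_request(req_id: int, method: str, params: dict[str, Any] | None = None) -> dict[str, Any]:
    """A request carries an id so the matching response can be paired with it."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "id": req_id, "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def make_notification(method: str, params: dict[str, Any] | None = None) -> dict[str, Any]:
    """A notification has no id, so the receiver sends no response."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def make_result(req_id: int, result: Any) -> dict[str, Any]:
    """A successful response echoes the request id and carries a result."""
    return {"jsonrpc": "2.0", "id": req_id, "result": result}


def make_error(req_id: int, code: int, message: str) -> dict[str, Any]:
    """A failed response echoes the request id and carries an error object."""
    return {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}


def frame_for_stdio(msg: dict[str, Any]) -> str:
    """Over STDIO, each message is a single line of JSON terminated by a newline."""
    return json.dumps(msg, separators=(",", ":")) + "\n"


if __name__ == "__main__":
    print(frame_for_stdio(make_request(1, "tools/list")), end="")
    # -32601 is the standard JSON-RPC "Method not found" error code.
    print(frame_for_stdio(make_error(1, -32601, "Method not found")), end="")
```

Because json.dumps escapes any newline inside string values, each framed message is guaranteed to occupy exactly one line. This is what lets a STDIO reader split the stream safely on line breaks.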

Function Discovery and Execution

When a user opens an AI client like Claude Desktop, the client launches (for local servers) or connects to (for remote servers) each configured MCP server and performs a handshake:

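- Initial connection – The client connects to the server over STDIO or HTTP. The two sides then exchange an initialize request and response to agree on a protocol version and supported capabilities.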

- Capability discovery – The client sends a “what can you do?” request (tools/list in the MCP specification). The server responds with a list of functions, which MCP calls tools, along with their parameters and human‑readable descriptions descope.com.

- Registration – The client registers these functions and makes them available for the AI model to call.
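The three steps map onto concrete MCP messages: an initialize request, a notifications/initialized notification, and a tools/list request. The sketch below runs them against an in‑process toy server, so it needs no network or SDK. The tool get_device_status and the client and server names are hypothetical. A real client would send these messages over STDIO or HTTP rather than through a function call:

```python
from __future__ import annotations

from typing import Any, Callable

# Hypothetical tool advertised by the toy server below.
TOOLS = [
    {
        "name": "get_device_status",
        "description": "Return up/down status for a device by IP address.",
        "inputSchema": {
            "type": "object",
            "properties": {"ip": {"type": "string"}},
            "required": ["ip"],
        },
    }
]


def toy_server(msg: dict[str, Any]) -> dict[str, Any] | None:
    """Answer the two requests used during the handshake; ignore notifications."""
    if "id" not in msg:  # notifications never get a reply
        return None
    if msg["method"] == "initialize":
        result = {
            "protocolVersion": msg["params"]["protocolVersion"],
            "capabilities": {"tools": {}},
            "serverInfo": {"name": "toy-network-server", "version": "0.1"},
        }
    elif msg["method"] == "tools/list":
        result = {"tools": TOOLS}
    else:
        return {"jsonrpc": "2.0", "id": msg["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": msg["id"], "result": result}


def handshake(send: Callable[[dict[str, Any]], dict[str, Any] | None]) -> dict[str, dict[str, Any]]:
    """Connect, discover capabilities, and register tools by name."""
    # 1. Initial connection: negotiate protocol version and capabilities.
    init = send({"jsonrpc": "2.0", "id": 1, "method": "initialize",
                 "params": {"protocolVersion": "2024-11-05", "capabilities": {},
                            "clientInfo": {"name": "example-client", "version": "0.1"}}})
    if init is None or "tools" not in init["result"]["capabilities"]:
        raise RuntimeError("server does not offer tools")
    send({"jsonrpc": "2.0", "method": "notifications/initialized"})
    # 2. Capability discovery: ask which tools the server exposes.
    listed = send({"jsonrpc": "2.0", "id": 2, "method": "tools/list"})
    # 3. Registration: keep the descriptors so the model can choose among them.
    return {tool["name"]: tool for tool in listed["result"]["tools"]}


if __name__ == "__main__":
    registry = handshake(toy_server)
    print(sorted(registry))  # ['get_device_status']
```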

During a conversation, when a user asks a question that maps to a registered function, the AI chooses the appropriate tool, fills in the parameters and invokes the function via the MCP server. The server executes the underlying task (e.g., querying SNMP data or running a SQL command) and returns the result. The AI then formats that result into a human‑friendly answer.
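On the server side, invocation arrives as a tools/call request naming the tool and its arguments. The sketch below shows one way a server might dispatch it. Following the MCP specification, it returns text content with an isError flag, so tool failures go back to the model as results rather than protocol errors. The inventory and the get_device_status handler are hypothetical stand‑ins for real SNMP polling or a monitoring API:

```python
from __future__ import annotations

import json
from typing import Any, Callable

# Hypothetical inventory standing in for SNMP polling or a monitoring API.
FAKE_STATUS = {"10.0.0.1": "up", "10.0.0.2": "down"}


def get_device_status(ip: str) -> str:
    """Hypothetical tool body: look up a device's status."""
    return FAKE_STATUS.get(ip, "unknown")


HANDLERS: dict[str, Callable[..., str]] = {"get_device_status": get_device_status}


def handle_tools_call(msg: dict[str, Any]) -> dict[str, Any]:
    """Execute a tools/call request and wrap the output as MCP text content."""
    name = msg["params"]["name"]
    args = msg["params"].get("arguments", {})
    handler = HANDLERS.get(name)
    if handler is None:
        # Unknown tool: reported as a JSON-RPC error (invalid params).
        return {"jsonrpc": "2.0", "id": msg["id"],
                "error": {"code": -32602, "message": f"Unknown tool: {name}"}}
    try:
        text, is_error = handler(**args), False
    except Exception as exc:  # e.g. wrong argument names from the model
        text, is_error = f"Tool failed: {exc}", True
    return {"jsonrpc": "2.0", "id": msg["id"],
            "result": {"content": [{"type": "text", "text": text}], "isError": is_error}}


if __name__ == "__main__":
    request = {"jsonrpc": "2.0", "id": 3, "method": "tools/call",
               "params": {"name": "get_device_status", "arguments": {"ip": "10.0.0.2"}}}
    print(json.dumps(handle_tools_call(request)["result"]))
    # {"content": [{"type": "text", "text": "down"}], "isError": false}
```

The AI host then turns that text content into the human‑friendly answer the user sees.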

Applications of MCP in Networking

MCP is not limited to AI chat interfaces; it has broad potential in network operations and automation:

- Real‑time monitoring and alerting – An MCP server can expose functions for retrieving device status, interface statistics, or error logs. AI assistants can then answer natural‑language queries about network health or proactively notify teams about anomalies.

- Configuration management – Through MCP, an AI can call NETCONF or vendor APIs to push configuration changes or audit compliance. This abstracts away the complexity of different device interfaces and data models.

- Troubleshooting and diagnostics – MCP servers can expose scripts to gather diagnostic information or perform tests like ping, traceroute and throughput measurements. An AI assistant can orchestrate these tests and explain the results.

- Documentation and reporting – By connecting MCP to network documentation systems (e.g., wikis, knowledge bases), AI can generate reports or build KPIs based on real‑time data.
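Each of these applications ultimately surfaces to the AI as a tool descriptor. A descriptor contains a name, a description the model reads, and a JSON Schema inputSchema for the parameters. The sketch below defines two hypothetical networking tools, one for monitoring and one for diagnostics. It also includes a deliberately tiny argument check that a server might run before touching real devices. A production server would use a full JSON Schema validator instead:

```python
from __future__ import annotations

from typing import Any

# Hypothetical tool descriptors a networking MCP server might advertise.
NETWORK_TOOLS: list[dict[str, Any]] = [
    {
        "name": "get_interface_stats",
        "description": "Return counters and error rates for one interface.",
        "inputSchema": {
            "type": "object",
            "properties": {"device": {"type": "string"}, "interface": {"type": "string"}},
            "required": ["device", "interface"],
        },
    },
    {
        "name": "run_ping",
        "description": "Ping a target from a given device and summarise loss and latency.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "source_device": {"type": "string"},
                "target": {"type": "string"},
                "count": {"type": "integer"},
            },
            "required": ["source_device", "target"],
        },
    },
]

_JSON_TYPES = {"string": str, "integer": int, "object": dict, "boolean": bool}


def check_arguments(tool: dict[str, Any], args: dict[str, Any]) -> list[str]:
    """Check a tiny subset of JSON Schema: required keys and primitive types."""
    schema = tool["inputSchema"]
    problems = [f"missing '{key}'" for key in schema.get("required", []) if key not in args]
    for key, value in args.items():
        spec = schema["properties"].get(key)
        if spec is None:
            problems.append(f"unexpected '{key}'")
            continue
        # bool is a subclass of int in Python, so reject it explicitly for "integer".
        wrong_type = not isinstance(value, _JSON_TYPES[spec["type"]]) or (
            spec["type"] == "integer" and isinstance(value, bool)
        )
        if wrong_type:
            problems.append(f"'{key}' should be {spec['type']}")
    return problems


if __name__ == "__main__":
    run_ping = NETWORK_TOOLS[1]
    print(check_arguments(run_ping, {"source_device": "edge-1", "count": "5"}))
    # ["missing 'target'", "'count' should be integer"]
```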

Across these applications, the value comes from contextual awareness. Instead of receiving a static list of metrics, an AI can interpret the data, correlate it with other information (like maintenance schedules or known issues), and provide actionable recommendations.
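As a simple illustration of that kind of correlation, the sketch below separates alerts that fall inside a planned maintenance window from alerts that need attention. The data classes and field names are hypothetical. In an MCP setup, the alerts and the maintenance windows could each come from a tool call to a different server, and logic like this would run before the AI summarises the situation:

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime


@dataclass
class Alert:
    device: str
    message: str
    raised_at: datetime


@dataclass
class MaintenanceWindow:
    device: str
    start: datetime
    end: datetime


def triage(alerts: list[Alert], windows: list[MaintenanceWindow]) -> dict[str, list[Alert]]:
    """Split alerts into those explained by planned maintenance and those needing action."""
    expected: list[Alert] = []
    actionable: list[Alert] = []
    for alert in alerts:
        in_window = any(
            w.device == alert.device and w.start <= alert.raised_at <= w.end for w in windows
        )
        if in_window:
            expected.append(alert)
        else:
            actionable.append(alert)
    return {"expected": expected, "actionable": actionable}


if __name__ == "__main__":
    t = datetime(2025, 3, 1, 2, 30)
    windows = [MaintenanceWindow("sw-core-1", datetime(2025, 3, 1, 2, 0), datetime(2025, 3, 1, 4, 0))]
    alerts = [Alert("sw-core-1", "link down", t), Alert("ap-north-1", "high packet loss", t)]
    result = triage(alerts, windows)
    print([a.device for a in result["actionable"]])  # ['ap-north-1']
```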

EyeOTmonitor and the Promise of MCP

EyeOTmonitor is a cloud‑based monitoring platform that manages thousands of devices across wireless ISPs, systems integrators and smart‑city operators worldwide. Beyond simply displaying metrics, the platform provides remote network management, intuitive topology and geospatial maps, real‑time dashboards and a sophisticated device severity engine. For instance, EyeOTmonitor lets you remotely access devices through SSH/Telnet or HTTP/HTTPS without building VPN tunnels, and you can scale by uploading thousands of devices at once eyeotmonitor.com. Its drag‑and‑drop topology maps update in real time so engineers can visualize device connections, open nested sub-maps and view detailed device balloons eyeotmonitor.com, while geospatial maps overlay network topology on real‑world maps with features like traffic overlays, elevation profiles and advanced filtering eyeotmonitor.com. Customizable dashboards deliver real‑time KPIs, historical trends and data‑driven insights eyeotmonitor.com, and the device severity engine assigns meaningful warning and critical levels to properties collected via SNMP, ONVIF or APIs eyeotmonitor.com. These capabilities make EyeOTmonitor an ideal testbed for AI integration.

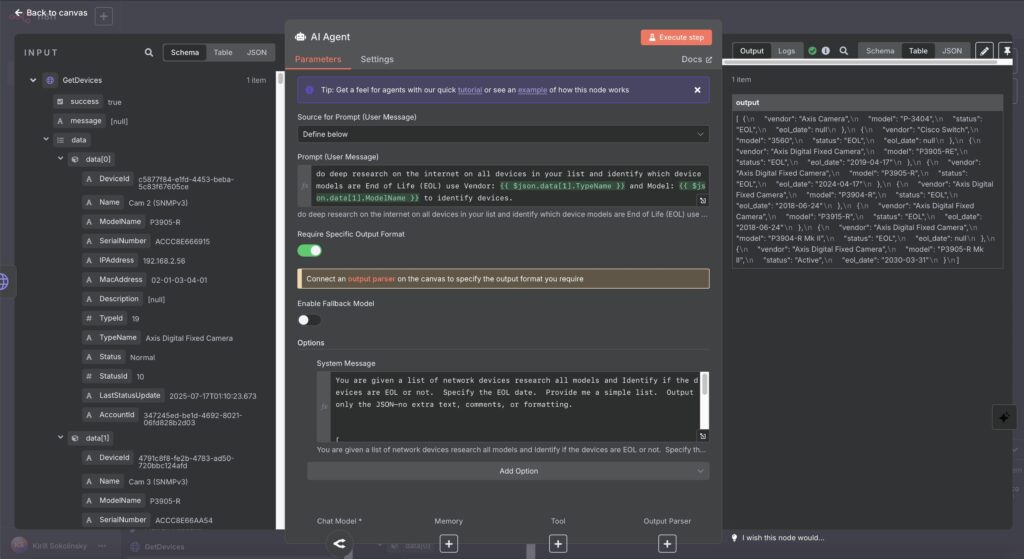

The EyeOTmonitor team conducted an experiment to see how MCP could enhance network visibility and automate reporting. The test involved three key components:

- EyeOTmonitor API – EyeOTmonitor’s REST API provides device metadata such as IP address, model, vendor and firmware version. The team used this API to query a customer test account.

- n8n workflow engine – n8n is an open‑source automation platform. The team created an HTTP request node in n8n to call the EyeOTmonitor API and fetch device data. They then added n8n’s built‑in MCP Server Trigger, exposing this workflow as an MCP tool.

- Claude Desktop – As an MCP‑capable host application, Claude Desktop connected to n8n’s MCP server. During the test, the team asked Claude, “How many devices are having problems?” and later “Which devices are end‑of‑life?” Claude used the MCP connection to run the workflow, retrieve data and return a detailed report.

Figure: n8n agent rules for communicating with EyeOTmonitor data.

The results were impressive. Without writing any additional code, the team could perform complex queries across thousands of devices and receive structured outputs in plain language. For example, when they asked about end‑of‑life (EOL) devices, Claude produced a report listing each device, its model, firmware version and EOL status. This enabled faster risk assessment and prioritized maintenance. The test also highlighted some areas for improvement—such as the need for data normalization and a dedicated MCP server to handle more advanced functions—which EyeOTmonitor plans to address in future development. This real‑world experiment demonstrates how MCP can deliver tangible benefits in network operations.
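At its core, the EOL report Claude produced is a filter and sort over device records. The sketch below shows that step on its own. It assumes hypothetical fields (name, model, firmware, eol_date), because the write‑up does not describe the actual shape of the EyeOTmonitor API response:

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date


@dataclass
class Device:
    name: str
    model: str
    firmware: str
    eol_date: date | None  # None when no end-of-life date is known


def eol_report(devices: list[Device], today: date) -> list[str]:
    """List devices already past end-of-life, oldest EOL date first."""
    past_eol = [d for d in devices if d.eol_date is not None and d.eol_date <= today]
    past_eol.sort(key=lambda d: d.eol_date)
    return [
        f"{d.name}: {d.model}, firmware {d.firmware}, EOL since {d.eol_date.isoformat()}"
        for d in past_eol
    ]


if __name__ == "__main__":
    # Hypothetical sample records, not data from the EyeOTmonitor test.
    devices = [
        Device("ap-north-1", "ModelA", "2.1.0", date(2023, 6, 30)),
        Device("sw-core-1", "ModelB", "5.4.2", None),
        Device("cam-lot-7", "ModelC", "1.0.9", date(2022, 1, 31)),
    ]
    for line in eol_report(devices, today=date(2025, 1, 1)):
        print(line)
```

Sorting by EOL date puts the longest‑unsupported devices first, which matches the goal of prioritizing maintenance.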

Benefits and Challenges of MCP

Benefits

- Standardization – MCP provides a consistent way to describe and invoke functions across different tools and AI models descope.com. This reduces integration efforts and future‑proofs your automation strategy.

- Contextual AI – By exposing rich context (device data, configuration parameters, historical logs) to AI assistants, MCP allows for smarter responses and recommendations. This goes beyond simple keyword matching.

- Flexibility – MCP servers can be written in multiple languages (Python, TypeScript, C#, Java) en.wikipedia.org and deployed locally or remotely. Organizations can choose the transport method (STDIO or HTTP+SSE) that best fits their security and performance requirements descope.com.

- Extensibility – Since each server focuses on a specific integration, you can modularly add new capabilities without affecting existing ones. For example, you might run separate servers for SNMP, NETCONF, your CMDB and a ticketing system.

- Enhanced automation – With AI calling multiple tools, workflows that once required scripting or manual steps can be executed through natural language. This improves productivity and reduces human error.
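The extensibility point is visible in how a host is configured. Claude Desktop reads local MCP servers from a JSON file (claude_desktop_config.json) with an mcpServers object. Each entry gives the command that launches one STDIO server, plus optional arguments and environment variables. The sketch below generates such a config for the four integrations mentioned above. The server script names and the token variable are hypothetical placeholders:

```python
import json

# Hypothetical local servers, one per integration point; script names are illustrative.
servers = {
    "snmp": {"command": "python", "args": ["snmp_server.py"]},
    "netconf": {"command": "python", "args": ["netconf_server.py"]},
    "cmdb": {"command": "python", "args": ["cmdb_server.py"]},
    "ticketing": {
        "command": "python",
        "args": ["ticketing_server.py"],
        "env": {"TICKETING_TOKEN": "<set-me>"},  # placeholder secret, supplied per site
    },
}

config = {"mcpServers": servers}

if __name__ == "__main__":
    print(json.dumps(config, indent=2))
```

Adding a capability means adding one more entry. The existing servers, and the AI host itself, do not change.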

Challenges

- Security – Exposing functions to AI raises concerns about data privacy and unauthorized access. You must implement proper authentication, authorization and transport encryption. Our security‑focused article delves into these issues.

- Data normalization – Different systems may return data in inconsistent formats. MCP servers should normalize outputs so that AI models can process them effectively. This was a challenge in the EyeOTmonitor test.

- Early ecosystem – MCP is relatively new; tooling and best practices are still evolving. Organizations should be prepared for changes as the community matures and standards evolve.

- Not a silver bullet – MCP complements rather than replaces existing protocols like SNMP or NETCONF. For some low‑level or high‑throughput tasks, traditional protocols may still be more efficient.
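As an illustration of what data normalization can mean in practice, the sketch below maps two hypothetical vendor payloads onto one schema before anything reaches the model. The payloads differ in field names, firmware formats and status encodings. The source names and field names are invented for the example, since the EyeOTmonitor write‑up does not say which inconsistencies it encountered:

```python
from __future__ import annotations

from typing import Any

_STATUS = {"up": "up", "1": "up", "true": "up", "down": "down", "0": "down", "false": "down"}


def normalize_firmware(raw: str) -> str:
    """Strip a leading 'v' and pad to at least three numeric parts: 'v2.1' -> '2.1.0'."""
    parts = raw.strip().lstrip("vV").split(".")
    while len(parts) < 3:
        parts.append("0")
    return ".".join(parts)


def normalize_status(raw: Any) -> str:
    """Map 'UP', 1, True, 'down', 0 and similar encodings onto 'up'/'down'/'unknown'."""
    return _STATUS.get(str(raw).strip().lower(), "unknown")


def normalize_record(source: str, record: dict[str, Any]) -> dict[str, str]:
    """Translate a hypothetical vendor-specific record into one common schema."""
    if source == "vendor_a":
        return {"name": record["hostname"],
                "firmware": normalize_firmware(record["fw"]),
                "status": normalize_status(record["status"])}
    if source == "vendor_b":
        return {"name": record["sysName"],
                "firmware": normalize_firmware(record["firmware_version"]),
                "status": normalize_status(record["oper_state"])}
    raise ValueError(f"unknown source: {source}")


if __name__ == "__main__":
    print(normalize_record("vendor_a", {"hostname": "ap-1", "fw": "v2.1", "status": "UP"}))
    print(normalize_record("vendor_b", {"sysName": "sw-1", "firmware_version": "5.4.2", "oper_state": 1}))
```

Doing this inside the MCP server, rather than leaving it to the model, keeps the AI's comparisons (for example, "which devices run firmware older than 2.1.0?") consistent across vendors.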

Future Outlook

Adoption of MCP is accelerating. Following Anthropic’s release, OpenAI, Replit and Sourcegraph announced support for the protocol en.wikipedia.org. Google DeepMind confirmed that future Gemini models will be MCP‑aware, calling the protocol “rapidly becoming an open standard for the AI agentic era” en.wikipedia.org. As more tools expose MCP servers, network engineers will gain plug‑and‑play access to an ever‑growing ecosystem of capabilities. Imagine being able to combine device inventory queries with ticket creation, documentation updates and training recommendations—all from a single AI interface.

At EyeOTmonitor, the roadmap includes building a dedicated MCP server that can execute complex network tasks (e.g., firmware upgrades, configuration backups) and return structured results. This will further streamline operations and pave the way for predictive maintenance powered by AI.

Conclusion and Key Takeaways

The Model Context Protocol is poised to transform how networks are monitored and managed. By providing a universal interface between AI models and external systems, MCP addresses the NxM integration problem and empowers engineers to ask natural‑language questions that trigger real work behind the scenes. The EyeOTmonitor test case shows that even today, teams can use MCP with existing tools like n8n to automate device queries and generate insightful reports.

Key takeaways:

- MCP eliminates the need for bespoke integrations by standardizing function calls across AI models and tools descope.com.

- The protocol uses a client‑server architecture with JSON‑RPC, supporting both local and remote transports descope.com.

- EyeOTmonitor’s proof of concept showed how MCP can automate device health checks and produce EOL reports without manual data wrangling.

- Adopting MCP requires attention to security, data normalization and evolving best practices, but the potential benefits are significant.

Ready to dive deeper?

Next: learn how MCP compares to SNMP and NETCONF in MCP vs SNMP & NetConf.